Chapter 27

The Future of Machine Learning with Rust

"The future belongs to those who believe in the beauty of their dreams." — Eleanor Roosevelt

Chapter 27 of MLVR provides a visionary overview of how Rust will shape the future of machine learning. The chapter highlights Rust's unique advantages—such as memory safety, performance, and concurrency—that make it an ideal choice for building next-generation ML systems. It explores emerging trends like federated learning, quantum machine learning, and ethical AI, showing how Rust can address the challenges posed by these advancements. The chapter also delves into the intersection of Rust with AI, quantum computing, and real-time applications, illustrating how Rust is poised to become a foundational language in these domains. Practical guidance is provided on leveraging Rust’s capabilities to develop cutting-edge ML frameworks and tools, ensuring that readers are well-equipped to lead in the future of ML development. By the end of this chapter, readers will have a deep understanding of how Rust can drive innovation in machine learning, positioning themselves at the forefront of this transformative field.

27.1. Rust’s Role in the Evolution of Machine Learning

As we venture into the future of machine learning, it is essential to examine the current landscape of this rapidly evolving field and the unique contributions that Rust can make in addressing its challenges. The state of machine learning today is characterized by a growing demand for more efficient algorithms, the need for robust data processing capabilities, and the necessity for systems that can operate in real-time environments. Rust, with its distinctive features, emerges as a powerful ally in this journey.

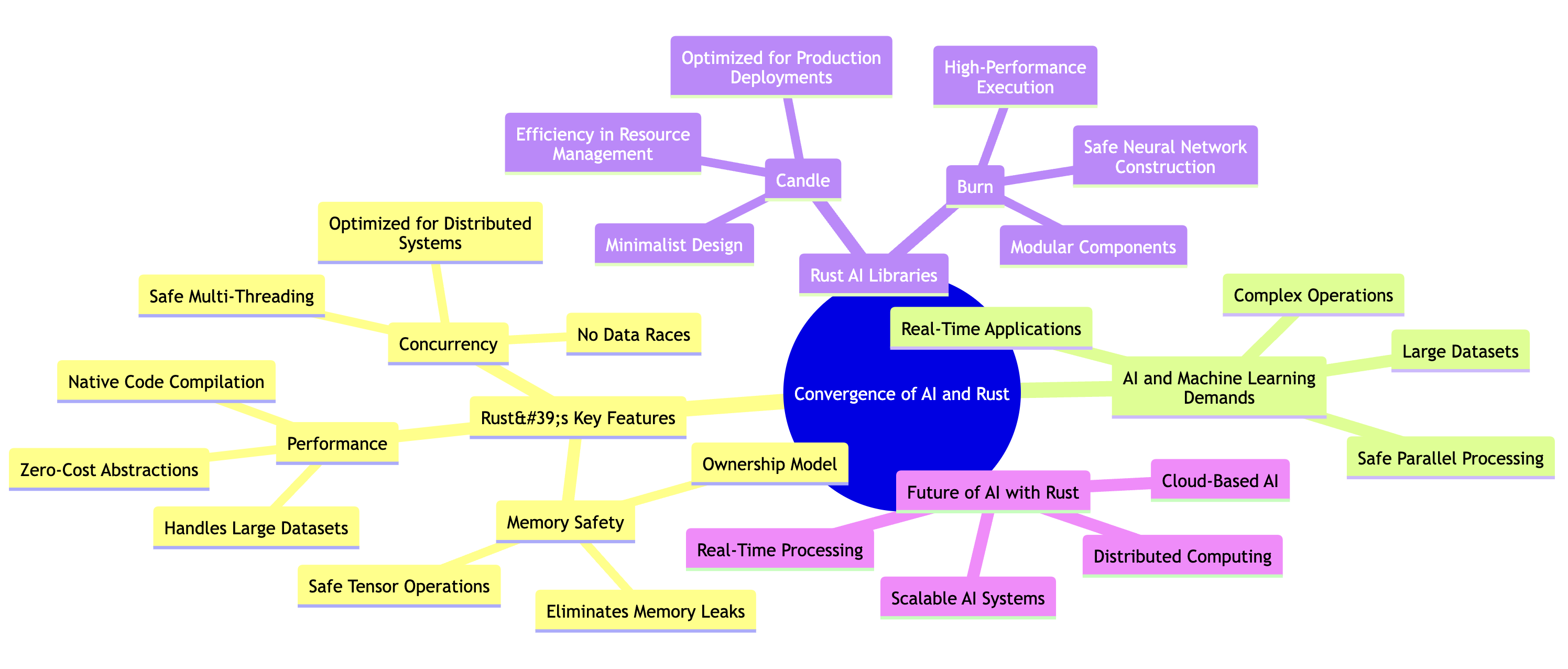

Figure 1: Potential Rust’s roles in evolution of Machine Learning.

Rust's design philosophy prioritizes performance, safety, and concurrency, making it particularly well-suited for machine learning applications that require high efficiency and reliability. The performance aspects of Rust are evident; it is a systems programming language that compiles to native code, allowing developers to write code that runs with the efficiency of C or C++. This is vital in machine learning, where the need for computational power is significant, particularly when handling large datasets or training complex models. Rust’s zero-cost abstractions enable developers to write high-level code without incurring performance penalties, which is especially beneficial for machine learning frameworks that demand both flexibility and speed.

Alongside performance, safety is another critical concern in machine learning, especially as models are deployed in production environments where errors can lead to significant consequences. Rust's ownership model and strict compile-time checks ensure memory safety, preventing common bugs such as null pointer dereferences and buffer overflows. These features help create more reliable systems that can handle the demands of machine learning applications without the risk of crashing or producing incorrect results due to unsafe memory practices. For instance, consider a scenario where a machine learning model processes sensitive data. Rust’s safety guarantees can help ensure that such data is handled correctly, thus maintaining integrity and confidentiality.

Furthermore, concurrency is an essential aspect of modern computing, particularly in machine learning where parallel processing can dramatically enhance performance. Rust’s concurrency model allows developers to write multi-threaded code that is safe from data races, thanks to its ownership rules. This capability is crucial as machine learning applications increasingly leverage multi-core processors and distributed computing environments. With the rise of cloud computing and distributed systems, Rust's ability to manage concurrent operations without compromising safety or performance positions it as a leading choice for future machine learning developments.

As we look ahead, it is clear that the future of machine learning will demand the ability to handle distributed computing and real-time applications with a high degree of reliability and efficiency. Rust's features align seamlessly with these needs. For example, in a distributed machine learning scenario, where models are trained over multiple nodes, Rust’s concurrency and memory safety will help manage the complexities of data sharing and synchronization between nodes, reducing the likelihood of errors and improving overall system robustness.

In terms of practical applications, several Rust-based machine learning frameworks and libraries have begun to emerge, showcasing the language's potential in this domain. One notable example is the Rust library called ndarray, which provides support for n-dimensional arrays and is crucial for numerical computing tasks commonly encountered in machine learning. Here is a simple example demonstrating how to use ndarray to perform basic operations:

use ndarray::Array2;

fn main() {

let a = Array2::<f64>::zeros((3, 3));

let b = Array2::<f64>::from_shape_vec((3, 3), vec![1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]).unwrap();

let c = &a + &b; // Element-wise addition

println!("{:?}", c);

}

This snippet initializes a zero matrix and adds it to another matrix, demonstrating how ndarray can facilitate operations on multi-dimensional data structures, which is a fundamental aspect of machine learning.

Furthermore, libraries such as tch-rs, which provides Rust bindings for the popular PyTorch library, allow developers to build complex machine learning models while leveraging Rust's safety and performance features. Below is an example of building a simple neural network using tch-rs:

use tch::{nn::{self, Module, OptimizerConfig}, Device, Tensor};

fn main() {

let device = Device::cuda_if_available();

let vs = nn::VarStore::new(device);

let net = nn::seq()

.add(nn::linear(vs.root() / "layer1", 784, 128, Default::default()))

.add_fn(|xs| xs.relu())

.add(nn::linear(vs.root() / "layer2", 128, 10, Default::default()));

let optimizer = nn::Adam::default().build(&vs, 1e-3).unwrap();

// Example data

let input = Tensor::randn(&[64, 784], (tch::Kind::Float, device));

let output = net.forward(&input);

println!("{:?}", output);

}

In this example, we create a simple feedforward neural network with two layers using the tch-rs library. This showcases how Rust can be utilized to construct and train machine learning models while maintaining adherence to best practices in memory safety and concurrency.

In summary, Rust's role in the evolution of machine learning is poised to be significant. By addressing the fundamental challenges of performance, safety, and concurrency, Rust aligns well with the future needs of machine learning, particularly in the realms of distributed computing and real-time applications. With an emerging ecosystem of Rust-based ML frameworks and libraries, the potential for innovative solutions in machine learning is vast. As we continue to explore this exciting intersection of technology, Rust's contributions will likely shape the landscape of machine learning in the years to come.

27.2. Emerging Trends in Machine Learning with Rust

As machine learning continues to evolve, several emerging trends are shaping the landscape of this field. Among these trends are explainable AI, federated learning, AutoML, and edge computing. Each of these concepts addresses specific challenges and opens up new opportunities for innovation. In the context of Rust, a systems programming language known for its performance and safety, we can explore how these trends can be integrated and leveraged effectively.

Explainable AI (XAI) is gaining significant traction as the demand for transparency in machine learning models increases. As models become more complex, understanding how they make decisions becomes vital, particularly in fields like healthcare and finance. Rust's strong type system and memory safety features make it an excellent candidate for implementing XAI techniques. For instance, we can develop libraries that provide insights into model predictions through various interpretability methods. Below is a simple Rust example that demonstrates the use of a basic linear regression model and a method to compute feature importance.

use linregress::{FormulaRegressionBuilder, RegressionDataBuilder};

fn main() {

let y = vec![1., 2., 3., 4., 5.];

let x1 = vec![5., 4., 3., 2., 1.];

let x2 = vec![729.53, 439.0367, 42.054, 1., 0.];

let x3 = vec![258.589, 616.297, 215.061, 498.361, 0.];

let data = vec![("Y", y), ("X1", x1), ("X2", x2), ("X3", x3)];

let data = RegressionDataBuilder::new().build_from(data).unwrap();

let formula = "Y ~ X1 + X2 + X3";

let model = FormulaRegressionBuilder::new()

.data(&data)

.formula(formula)

.fit()

.unwrap();

let parameters: Vec<_> = model.iter_parameter_pairs().collect();

let pvalues: Vec<_> = model.iter_p_value_pairs().collect();

let standard_errors: Vec<_> = model.iter_se_pairs().collect();

println!("Parameters: {:?}", parameters);

println!("P Values: {:?}", pvalues);

println!("Std Error: {:?}", standard_errors);

}

Federated learning is another trend gaining momentum, particularly in scenarios where data privacy is paramount. This approach allows machine learning models to be trained across decentralized devices holding local data, thus preserving privacy. Rust's efficient concurrency model and low-level control over resources can facilitate the development of federated learning systems. By utilizing Rust’s async capabilities, we can build a framework that allows devices to compute model updates independently and securely. Below is a basic example illustrating how we could structure a federated learning setup in Rust, using asynchronous tasks to simulate local training.

// Append Cargo.toml

[dependencies]

futures = "0.3.31"

tokio = { version = "1.40.0", features = ["full"] }

use tokio::task;

async fn local_training(data: Vec<f64>) -> f64 {

// Simulate training on local data and return a model update

let model_update = data.iter().sum::<f64>() / data.len() as f64;

model_update

}

#[tokio::main]

async fn main() {

let devices_data = vec![

vec![1.0, 2.0, 3.0],

vec![4.0, 5.0, 6.0],

vec![7.0, 8.0, 9.0],

];

let mut tasks = vec![];

for data in devices_data {

tasks.push(task::spawn(local_training(data)));

}

let results: Vec<f64> = futures::future::join_all(tasks).await.into_iter().map(|r| r.unwrap()).collect();

println!("Model updates from devices: {:?}", results);

}

AutoML automates the process of applying machine learning to real-world problems, making it easier for non-experts to use powerful algorithms. Rust's performance and reliability can drive the development of AutoML tools that can automate hyperparameter tuning, feature selection, and model selection. By creating a Rust library that can interface with existing machine learning frameworks, we can streamline the AutoML process. An example of a simplified AutoML approach in Rust might involve automating the selection of the best model based on performance metrics.

// Pseudo-code for a simple AutoML framework in Rust

fn evaluate_model(model: &str, data: &Vec<f64>) -> f64 {

// Logic to evaluate the model on the given data

// This could involve cross-validation and returning a score

0.75 // Placeholder for evaluation score

}

fn auto_ml(data: Vec<f64>, models: Vec<&str>) -> &str {

let mut best_model = "";

let mut best_score = 0.0;

for model in models {

let score = evaluate_model(model, &data);

if score > best_score {

best_model = model;

best_score = score;

}

}

best_model

}

fn main() {

let data = vec![1.0, 2.0, 3.0, 4.0, 5.0];

let models = vec!["LinearRegression", "DecisionTree", "SVM"];

let best_model = auto_ml(data, models);

println!("Best model selected: {}", best_model);

}

Edge computing represents a paradigm shift in how data is processed and analyzed. By processing data closer to where it is generated, edge computing reduces latency and bandwidth use, making it ideal for real-time applications. Rust's ability to compile to small, efficient binaries is particularly beneficial for edge devices with limited resources. The following example illustrates how one could implement a basic machine learning inference model on an edge device using Rust.

fn predict(input: Vec<f64>) -> f64 {

// Placeholder for a machine learning model prediction

input.iter().sum()

}

fn main() {

let edge_device_input = vec![1.0, 2.0, 3.0];

let prediction = predict(edge_device_input);

println!("Prediction from edge device: {}", prediction);

}

In conclusion, Rust is uniquely positioned to contribute significantly to the emerging trends in machine learning. Its emphasis on security, efficiency, and cross-platform compatibility makes it an attractive choice for developing advanced ML techniques. By implementing emerging trends such as explainable AI, federated learning, AutoML, and edge computing in Rust, we can harness the language's strengths to address modern challenges in machine learning. As we move forward, Rust will likely play a pivotal role in shaping the future of machine learning applications, enabling developers to build robust, efficient, and secure systems.

27.3. The Intersection of Rust and Artificial Intelligence (AI)

The convergence of Artificial Intelligence (AI) and Rust marks a pivotal development in the creation of intelligent systems. As AI continues to expand across industries such as healthcare, finance, and autonomous systems, the demand for programming languages capable of managing AI’s complexity while ensuring safety and performance has become more urgent. Rust emerges as a strong contender due to its unique features, including memory safety, concurrency, and performance efficiency, making it particularly well-suited for AI and machine learning applications.

Figure 2: The convergence of AI and Rust ecosystem.

At the heart of this synergy between Rust and AI is the need for robust systems capable of handling complex operations without sacrificing performance or safety. Traditional programming languages like Python or C++ may encounter issues such as memory management problems, which can lead to bugs with serious consequences in AI applications. Rust addresses these concerns through its ownership model, which enforces strict memory access and modification rules, eliminating risks like memory leaks and undefined behavior. This is crucial in AI environments where data integrity, speed, and accuracy are paramount. For example, when building deep learning models, memory safety ensures that tensor operations—essential for neural networks—are performed reliably, without risks of invalid memory access.

Rust’s performance characteristics also contribute significantly to its suitability for AI. It compiles to native code, offering performance on par with C or C++, which is critical for handling large datasets and running computationally expensive machine learning models. Rust’s zero-cost abstractions further allow developers to write high-level code without incurring performance penalties, providing both flexibility and speed. This is essential in training deep learning models, where processing efficiency can drastically impact model performance and scalability.

Concurrency is another area where Rust excels, particularly as modern AI systems increasingly rely on multi-threading and distributed computing to handle parallel processing tasks. Rust’s concurrency model prevents data races and ensures safe multi-threaded execution, which is crucial for training large models or working in distributed environments. As AI continues to move toward real-time applications and cloud-based solutions, Rust’s concurrency features position it as a prime candidate for scaling AI systems efficiently and safely.

Moreover, the Rust ecosystem has grown to support deep learning through the development of powerful libraries such as Burn and Candle. Burn is a flexible deep learning framework written in Rust that provides modular components for building neural networks. It is designed to offer high-performance execution while maintaining the memory safety and concurrency features of Rust. Burn’s architecture allows developers to customize layers and operations while ensuring safe and efficient computations, making it ideal for building complex AI models.

Candle, another Rust-based deep learning library, emphasizes minimalism and efficiency. It is optimized for performance while keeping the design simple, allowing developers to build, train, and deploy deep learning models without the overhead often seen in more extensive frameworks. Candle is particularly well-suited for applications where performance and resource management are critical, making it a great choice for deploying models in production environments.

As we look to the future of AI development, Rust’s growing ecosystem of libraries, combined with its unique features, provides a compelling solution for developers who require both performance and safety in AI. With the addition of frameworks like Burn and Candle, Rust is positioned to play a central role in the next generation of deep learning and AI systems. Rust’s potential to handle distributed computing, real-time processing, and safe multi-threading sets it apart from traditional AI languages, offering a promising path forward for scalable, reliable, and efficient AI applications.

The basic benefits of using Rust extend beyond memory safety to include its performance characteristics. As AI models become increasingly complex and data-intensive, the need for efficient computation grows. Rust's zero-cost abstractions allow developers to write high-level code without sacrificing performance, making it an ideal choice for developing scalable AI solutions. For example, consider a scenario where an AI model requires intensive linear algebra operations. Rust's ecosystem includes libraries like nalgebra, which provide optimized implementations of mathematical operations. This enables developers to build high-performance AI applications while maintaining code clarity and safety. An example implementation might look like this:

use nalgebra as na;

use na::{DMatrix, DVector};

fn main() {

// Create a matrix and a vector

let matrix = DMatrix::from_row_slice(2, 2, &[1.0, 2.0, 3.0, 4.0]);

let vector = DVector::from_row_slice(&[1.0, 2.0]);

// Perform matrix-vector multiplication

let result = matrix * vector;

println!("Result of multiplication:\n{}", result);

}

In this example, Rust's type system and performance-oriented design allow developers to handle complex mathematical operations succinctly and safely. By using the nalgebra library, practitioners can implement sophisticated algorithms without delving into the intricacies of low-level memory management.

Moreover, practical applications of AI using Rust can be seen in various domains such as computer vision, natural language processing, and robotics. The growing ecosystem of AI libraries in Rust, such as tch-rs (a Rust binding for PyTorch) and rustlearn, is a testament to the community's commitment to building reliable AI systems. A practical implementation of a simple linear regression model in Rust can be achieved using the rustlearn library, showcasing how accessible AI development can be in Rust:

use rustlearn::datasets::iris;

use rustlearn::linear_models::sgdclassifier::Hyperparameters;

use rustlearn::prelude::*;

fn main() {

let (X, y) = iris::load_data();

let mut model = Hyperparameters::new(4)

.learning_rate(1.0)

.l2_penalty(0.5)

.l1_penalty(0.0)

.one_vs_rest();

let num_epochs = 20;

for _ in 0..num_epochs {

model.fit(&X, &y).unwrap();

}

let prediction = model.predict(&X).unwrap();

println!("Predictions: {:?}", prediction);

}

This code snippet illustrates how straightforward it can be to implement machine learning algorithms in Rust, harnessing its strengths to create scalable and efficient AI applications. The use of high-level abstractions in conjunction with Rust's performance guarantees empowers developers to focus on algorithmic innovation rather than the underlying technical complexities.

In conclusion, as we look to the future of machine learning and AI development, Rust stands out as a language that can meet the challenges posed by this rapidly evolving field. Its ability to manage complex systems safely and efficiently positions Rust as a potential leader in AI programming. As more developers recognize the advantages of Rust, we can anticipate a surge in the creation of robust, scalable AI solutions that harness the power of this remarkable language. The intersection of Rust and AI is not merely a theoretical concept; it is a practical reality that holds immense promise for the future of intelligent systems.

27.4. Rust in the Context of Quantum Machine Learning

As discussed in Chapter 26, Quantum Machine Learning (QML) represents a fascinating intersection between quantum computing and machine learning, leveraging the principles of quantum mechanics to enhance the capabilities of traditional machine learning algorithms. As we delve into the future of machine learning with Rust, it is essential to explore how this systems programming language can facilitate the development of quantum computing applications, thereby enriching the field of QML. Rust's emphasis on safety, concurrency, and performance makes it a compelling choice for implementing quantum algorithms, enabling researchers and developers to harness the power of quantum computing effectively.

At its core, quantum machine learning seeks to exploit quantum phenomena such as superposition and entanglement to improve the efficiency and effectiveness of machine learning tasks. For instance, quantum algorithms can potentially process large datasets exponentially faster than classical algorithms, revolutionizing areas such as data classification, clustering, and regression. Rust, with its strong type system and memory safety guarantees, can play a pivotal role in developing robust quantum computing applications. The language's ability to manage performance-critical tasks without sacrificing safety ensures that quantum algorithms can be implemented with precision and reliability, paving the way for innovative QML solutions.

One of the fundamental ideas underpinning Rust's utility in quantum machine learning is the language's support for functional programming paradigms. Quantum computing often involves operations on quantum states which can be naturally expressed using functional programming concepts. Rust’s first-class functions and powerful abstractions allow developers to create reusable components that model quantum gates and circuits. By utilizing Rust's traits and generics, practitioners can implement quantum algorithms in a modular fashion, enabling greater flexibility and maintainability in the codebase. For example, consider the implementation of a simple quantum gate in Rust, which could serve as a foundational building block for more complex quantum circuits.

trait QuantumGate {

fn apply(&self, state: &mut Vec<f64>);

}

struct Hadamard;

impl QuantumGate for Hadamard {

fn apply(&self, state: &mut Vec<f64>) {

let temp = state.clone();

let n = state.len();

for i in 0..n {

state[i] = (temp[i] + temp[(i ^ 1)]) / f64::sqrt(2.0);

}

}

}

In this example, we define a QuantumGate trait that requires an apply method to modify a quantum state. The Hadamard struct implements this trait, demonstrating how Rust's type system can encapsulate quantum operations, providing a clear interface for other quantum gates to follow suit. By establishing such abstractions, Rust enables efficient composition of quantum algorithms, thereby enhancing the development of quantum machine learning applications.

Integrating Rust with classical machine learning systems can significantly enhance the performance and reliability of hybrid models that utilize both classical and quantum computing paradigms. For instance, a practical implementation might involve using Rust to manage data preprocessing and classical feature extraction while offloading specific computational tasks to a quantum processor. This synergy allows researchers to leverage the strengths of both computing approaches, optimizing the overall machine learning workflow. Rust's concurrency model further aids in this integration, allowing for efficient parallel execution of classical algorithms while preparing data to be sent to quantum processors.

To illustrate this integration, consider a scenario where we extract features from a dataset using Rust, subsequently passing these features to a quantum algorithm for classification. The following snippet demonstrates how we might prepare a dataset and utilize a quantum classifier:

fn extract_features(data: &Vec<Vec<f64>>) -> Vec<f64> {

// A simple feature extraction function

data.iter()

.map(|row| row.iter().sum()) // Summing features for simplicity

.collect()

}

fn classify_with_quantum(features: &Vec<f64>) -> Vec<u8> {

// Placeholder for quantum classification logic

features.iter().map(|_| 0).collect() // Dummy classification

}

fn main() {

let data: Vec<Vec<f64>> = vec![

vec![0.1, 0.2, 0.3],

vec![0.4, 0.5, 0.6],

];

let features = extract_features(&data);

let classifications = classify_with_quantum(&features);

println!("{:?}", classifications);

}

In this code, we first define a simple feature extraction function that sums the features of each data instance. Subsequently, we have a placeholder for a quantum classification function, which would ideally invoke a quantum algorithm to classify the prepared features. This example emphasizes how Rust can effectively bridge classical and quantum machine learning efforts, creating a seamless workflow that enhances the efficacy of the overall system.

Looking to the future, the potential of quantum machine learning combined with Rust’s capabilities suggests promising avenues for research and application development. As quantum hardware matures and becomes more accessible, we anticipate an increasing demand for tools and frameworks that facilitate quantum programming. Rust's performance and safety characteristics position it uniquely to contribute to the development of these tools, fostering a robust ecosystem for QML. The community-driven projects, such as qiskit-rust or others that might emerge, will likely benefit from Rust’s features, allowing developers to build sophisticated quantum algorithms and integrate them with existing classical machine learning frameworks.

In conclusion, the relationship between Rust and quantum machine learning is poised to grow as the field of quantum computing continues to evolve. Rust offers a robust foundation for developing quantum algorithms, enabling researchers to explore the vast potential of quantum computing in enhancing machine learning tasks. By leveraging Rust's unique features, we can expect to see innovative QML applications that not only push the boundaries of what is possible with machine learning but also advance our understanding of quantum phenomena in computational contexts. As we forge ahead, it is essential for developers and researchers to embrace the opportunities presented by this exciting fusion of technologies, driving forward the future of machine learning with Rust.

27.5. Rust for Federated and Privacy-Preserving Machine Learning

In recent years, the demand for privacy-preserving machine learning techniques has surged in response to growing concerns about data security and user privacy. As discussed in Chapter 24, Federated learning, a decentralized approach to training machine learning models, allows for collaborative learning without the need to share raw data between participants. This technique is particularly valuable in scenarios where data sensitivity is a concern, such as healthcare, finance, and personal user data. Rust, known for its emphasis on safety and performance, presents an ideal programming environment for developing federated learning systems that prioritize privacy.

At the core of federated learning is the idea that data remains on the devices where it is generated. Instead of transferring sensitive data to a central server, the model is trained locally on each device, and only the model updates are sent to the central server. This approach reduces the risk of exposing sensitive information, as the raw data never leaves the device. Rust's features, including ownership and borrowing, help ensure that data is handled securely and efficiently, making it an excellent choice for implementing such systems. Furthermore, Rust's strong type system can help prevent many common programming errors that could lead to security vulnerabilities, such as buffer overflows and null pointer dereferences.

However, federated learning also presents several challenges, particularly in the realms of scalability, communication efficiency, and the inherent complexity of managing model updates from multiple sources. In a federated learning scenario, devices may have heterogeneous data distributions, which can lead to challenges in model convergence and performance. Moreover, ensuring the integrity of model updates while maintaining user privacy is a significant concern. Rust's safety guarantees, such as preventing data races and ensuring memory safety, can mitigate these risks by enforcing strict rules around concurrent access and data management. By leveraging Rust's capabilities, developers can create robust federated learning systems that are less susceptible to security threats.

To illustrate the practical application of federated learning in Rust, consider a scenario where we are developing a simple federated learning framework. In this example, we'll create a basic structure for handling local model training and securely aggregating updates from multiple clients. The following code snippet outlines a framework for federated learning that includes both local training and update aggregation.

use std::sync::{Arc, Mutex};

use std::thread;

struct LocalModel {

weights: Vec<f32>,

}

impl LocalModel {

fn new() -> LocalModel {

LocalModel { weights: vec![0.0; 10] } // Initialize with 10 weights

}

fn train(&mut self, data: &[f32]) {

// Dummy training logic - adjust weights based on local data

for (i, &value) in data.iter().enumerate() {

self.weights[i] += value * 0.1; // Simple gradient step

}

}

fn get_weights(&self) -> Vec<f32> {

self.weights.clone()

}

}

struct FederatedLearning {

models: Vec<Arc<Mutex<LocalModel>>>,

}

impl FederatedLearning {

fn new(num_clients: usize) -> FederatedLearning {

let models = (0..num_clients)

.map(|_| Arc::new(Mutex::new(LocalModel::new())))

.collect();

FederatedLearning { models }

}

fn train_clients(&self, data: Vec<Vec<f32>>) {

let mut handles = vec![];

for (i, model) in self.models.iter().enumerate() {

let model_clone = Arc::clone(model);

let local_data = data[i].clone();

let handle = thread::spawn(move || {

let mut m = model_clone.lock().unwrap();

m.train(&local_data);

});

handles.push(handle);

}

for handle in handles {

handle.join().unwrap();

}

}

fn aggregate_updates(&self) -> Vec<f32> {

let mut aggregated_weights = vec![0.0; 10];

let client_count = self.models.len() as f32;

for model in &self.models {

let m = model.lock().unwrap();

for (i, &weight) in m.get_weights().iter().enumerate() {

aggregated_weights[i] += weight / client_count; // Simple averaging

}

}

aggregated_weights

}

}

fn main() {

let federated_learning = FederatedLearning::new(3);

let client_data = vec![vec![1.0, 0.5, 0.2, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],

vec![0.0, 0.1, 0.4, 0.6, 0.5, 0.3, 0.0, 0.0, 0.0, 0.0],

vec![0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.5, 0.3, 0.1]];

federated_learning.train_clients(client_data);

let aggregated_weights = federated_learning.aggregate_updates();

println!("Aggregated weights: {:?}", aggregated_weights);

}

In this code, we define a LocalModel struct that represents the model being trained on each client's data. The train method simulates a simple training process, and get_weights retrieves the current model weights. The FederatedLearning struct manages multiple LocalModel instances and facilitates training across clients. The train_clients method spawns threads to train each client's model concurrently, while the aggregate_updates method combines the weights from all models through a simple averaging process.

This example demonstrates the foundational concepts of federated learning in Rust, highlighting how Rust's concurrency model, memory safety, and type system contribute to the development of secure and efficient machine learning applications. By utilizing Rust for federated and privacy-preserving machine learning, developers can create systems that not only respect user privacy but also provide the performance and reliability necessary for real-world applications. As the field of machine learning continues to evolve, Rust's unique features position it as a powerful tool for addressing the challenges of privacy and security in this domain.

27.6. Rust in Real-Time and Edge Computing for ML

The landscape of machine learning (ML) is evolving rapidly, with a significant push towards real-time applications, particularly in edge computing scenarios. As the demand for low-latency, high-performance ML applications grows, the need for programming languages that can efficiently handle such requirements has become evident. Rust, with its unique combination of performance, safety, and concurrency, stands out as a strong candidate for developing ML systems that operate in real-time and on edge devices.

In the realm of real-time ML applications, the ability to process data and make decisions swiftly is paramount. Edge computing, which brings computation and data storage closer to the location where it is needed, is particularly relevant in this context. It reduces latency, conserves bandwidth, and enhances data privacy. As devices become increasingly intelligent, the ability to deploy ML models directly on edge devices, such as IoT sensors, drones, and autonomous vehicles, has become essential. Rust's strong emphasis on performance makes it an excellent choice for these environments, where resources are often constrained, and performance is critical.

One of the core strengths of Rust lies in its performance characteristics. Rust compiles to native code and provides fine-grained control over system resources, enabling developers to write high-performance applications that can leverage the full capabilities of the underlying hardware. This is especially important for edge devices, which often have limited computational power compared to traditional servers. Furthermore, Rust's ownership model ensures memory safety without the need for garbage collection, significantly reducing the overhead typically associated with memory management in other languages. This is critical in real-time applications where predictable performance is necessary to meet strict latency requirements.

Concurrency is another area where Rust excels, making it particularly suitable for real-time ML applications. The ability to efficiently manage multiple threads and perform parallel computations is essential when working with data streams or when processing sensor data in real time. Rust's concurrency model, which is based on the concepts of ownership and borrowing, allows developers to write concurrent code that is safe and free from data races. This is particularly advantageous in edge computing scenarios where multiple sensors might be generating data simultaneously, and the application must process this data in real-time.

To illustrate the practical application of Rust in real-time ML scenarios, consider the development of a simple image classification model that runs on an edge device, such as a Raspberry Pi. Using a lightweight ML library like ndarray for numerical operations and tch-rs, a Rust binding for the PyTorch library, we can build a model that classifies images captured by a camera. The following example demonstrates how we can load a pre-trained model and run inference on an image in real-time.

use std::time::Instant;

use image::DynamicImage;

use tch::{nn::{self, ModuleT}, vision, Device, Tensor};

fn preprocess_image(image: DynamicImage) -> Tensor {

// Resize, normalize, and convert the image to Tensor

let resized_image = image.resize(224, 224, image::imageops::FilterType::Nearest);

let rgb_image = resized_image.to_rgb8();

let tensor_image = Tensor::from_data_size(&rgb_image, &[1, 224, 224, 3], tch::Kind::Uint8)

.to_kind(tch::Kind::Float)

.permute(&[0, 3, 1, 2]); // Channels first

tensor_image / 255.0 // Normalize the image

}

// Dummy function for capturing image

fn capture_image_from_camera() -> DynamicImage {

DynamicImage::new_rgb8(224, 224) // Returning a dummy image

}

fn main() {

// Load a pre-trained ML model

let device = Device::cuda_if_available();

let vs = nn::VarStore::new(device);

let model = vision::vgg::vgg16(&vs.root(), 1000); // Load VGG16 model with 1000 classes (ImageNet)

// Capture an image from the camera (replace with actual camera logic)

let image: DynamicImage = capture_image_from_camera();

// Preprocess the image

let image_tensor = preprocess_image(image).to(device).reshape(&[1, 3, 224, 224]); // Use reshape instead of view

// Measure inference time

let start = Instant::now();

let output = model.forward_t(&image_tensor, true);

let duration = start.elapsed();

// Process output and make decisions

let prediction = output.argmax(1, false);

println!("Predicted class: {:?}", prediction);

println!("Inference time: {:?}", duration);

}

In this example, we first load a pre-trained model. Next, we capture an image from a camera, which is a typical operation in edge computing. The image is then preprocessed to fit the model's input requirements. We measure the inference time to ensure that it meets the real-time constraints. Finally, the model's output is processed to determine the classification result. This straightforward illustration highlights how Rust can be employed to create efficient, real-time ML applications suitable for deployment on edge devices.

Moreover, the integration of Rust with existing ML frameworks and libraries allows developers to leverage the vast ecosystem of tools while still benefiting from Rust's performance and safety. The growing community around Rust for ML, along with the development of specialized libraries and frameworks, is paving the way for its adoption in real-time applications across various industries, including healthcare, automotive, and smart home technologies.

In conclusion, Rust's performance, safety, and concurrency make it a compelling choice for developing real-time ML applications that operate at the edge. As the demand for intelligent edge devices continues to rise, the utilization of Rust in this domain will likely expand, enabling developers to build robust, efficient, and safe ML solutions that meet the stringent requirements of modern applications. By harnessing the power of Rust, engineers can create systems that not only deliver high performance but also maintain the integrity and safety of their applications in a rapidly evolving technological landscape.

27.7. The Future of ML Frameworks and Libraries in Rust

As we delve into the future of machine learning (ML) frameworks and libraries in Rust, we must first acknowledge the remarkable evolution of Rust as a programming language. Over the past few years, Rust has gained significant traction in various domains, including systems programming, web development, and more prominently, machine learning. The language's emphasis on safety, concurrency, and performance has made it an attractive choice for developers looking to create robust and efficient ML applications. The emergence of Rust-based ML frameworks and libraries marks a pivotal moment in the landscape of ML development, potentially reshaping how we approach machine learning projects.

The evolution of Rust-based ML frameworks has been multifaceted. Initially, the community's efforts were centered around creating basic libraries that offered foundational functionalities like linear regression or decision trees. However, as the community has grown, so has the complexity and richness of the available frameworks. For instance, libraries such as ndarray have emerged to provide powerful N-dimensional array capabilities, enabling developers to manipulate large datasets efficiently. Furthermore, frameworks like tch-rs, that provide Rust bindings for PyTorch, allow Rust developers to leverage the extensive features of established ML models while maintaining the performance and safety guarantees that Rust offers. This evolution is not just limited to existing frameworks but extends to the development of new libraries that cater to specific ML tasks, such as natural language processing or computer vision, presenting an exciting future for ML development in Rust.

The impact of these advancements on the future of ML development cannot be overstated. With Rust's ability to produce highly optimized binaries, ML models can be deployed in resource-constrained environments, such as IoT devices or edge computing scenarios, without sacrificing performance. This capability opens doors for real-time machine learning applications that require swift inference times and lower latency. Additionally, as the Rust ecosystem continues to mature, we can expect to see a stronger focus on interoperability, where Rust-based libraries can seamlessly integrate with those written in other languages. This will facilitate the adoption of Rust within existing ML workflows, allowing developers to harness Rust's advantages while still utilizing their preferred tools.

Community-driven development and open-source contributions are vital components in shaping the Rust ecosystem for machine learning. The collaborative nature of open-source projects fosters innovation and rapid iteration, allowing developers to build upon each other's work and share knowledge across the community. This environment encourages contributions from a diverse pool of talent, resulting in a more robust and feature-rich set of libraries. For example, developers can enhance existing libraries by adding new algorithms or improving performance, which not only benefits their own projects but also enriches the entire community. Engaging with the community through forums, GitHub repositories, and online meetups not only helps maintain momentum but also creates a sense of ownership and pride among contributors, further driving the evolution of ML in Rust.

For those looking to contribute to or create Rust-based ML libraries, best practices are essential for ensuring scalability and efficiency. One approach to building scalable ML tools in Rust is to leverage Rust's ownership model, which allows for fine-grained memory management and zero-cost abstractions. This enables developers to create libraries that can efficiently handle large datasets while preventing common pitfalls associated with memory leaks or data races. Additionally, adopting a modular design pattern can enhance the flexibility and usability of ML libraries. By breaking down functionalities into smaller, reusable components, developers can create a library that is easier to maintain and extend.

Here is a simple example of a Rust-based linear regression implementation that demonstrates some of these principles. The code highlights the use of the ndarray library for matrix operations, which is critical for efficient computations in machine learning.

// Append Cargo.toml

[dependencies]

ndarray = "0.15.0"

ndarray-linalg = "0.15.0"

use ndarray;

use ndarray::{Array, Array2};

use ndarray_linalg::Inverse;

struct LinearRegression {

weights: Array2<f64>,

}

impl LinearRegression {

pub fn new(features: usize) -> Self {

LinearRegression {

weights: Array::zeros((features, 1)),

}

}

pub fn fit(&mut self, x: &Array2<f64>, y: &Array2<f64>) {

// Here we would implement the training logic, for illustration purposes

// we will use a simple least squares solution.

let x_transpose = x.t();

let x_transpose_x = x_transpose.dot(x);

let x_transpose_y = x_transpose.dot(y);

self.weights = x_transpose_x.inv().unwrap().dot(&x_transpose_y);

}

pub fn predict(&self, x: &Array2<f64>) -> Array2<f64> {

x.dot(&self.weights)

}

}

// Usage

fn main() {

let x = Array::from_shape_vec((4, 2), vec![1.0, 1.0, 1.0, 2.0, 1.0, 3.0, 1.0, 4.0]).unwrap();

let y = Array::from_shape_vec((4, 1), vec![1.0, 2.0, 3.0, 4.0]).unwrap();

let mut model = LinearRegression::new(2);

model.fit(&x, &y);

let predictions = model.predict(&x);

println!("{:?}", predictions);

}

This simple linear regression implementation serves as a starting point for understanding how to structure an ML library in Rust. By focusing on modularization, performance, and efficient data handling, developers can create powerful tools that not only perform well but also adhere to best practices within the Rust ecosystem.

In conclusion, the future of machine learning frameworks and libraries in Rust is bright, driven by a strong community, evolving technologies, and a focus on performance and safety. As more developers recognize the benefits of Rust for ML applications, we can expect to see an increase in open-source contributions, leading to the development of robust, scalable, and efficient libraries. By embracing best practices and fostering collaboration, the Rust community is poised to make significant strides in the ML domain, ultimately contributing to the advancement of machine learning technology as a whole.

27.8. Rust and Ethical AI Development

The rapid evolution of artificial intelligence (AI) technologies has brought to the forefront the critical necessity of integrating ethical considerations into AI development. As the capabilities of AI systems expand, so does the potential for unintended consequences, including bias, discrimination, and a lack of accountability. In this context, Rust, with its emphasis on safety, concurrency, and performance, offers a unique framework for building ethical AI systems. Rust's design principles align well with the fundamental ideas of ethical AI development, addressing concerns such as fairness, accountability, and transparency.

At the core of ethical AI development lies the principle of fairness. Fairness implies that AI systems should not propagate or exacerbate existing societal biases. Rust's type system and ownership model provide a strong foundation for creating robust, reliable code that can help mitigate bias. For instance, when developing machine learning models in Rust, one can leverage these features to ensure that data preprocessing steps are conducted transparently and systematically. By enforcing strict type checks and ownership rules, Rust allows developers to create data pipelines that are less prone to errors that may lead to biased outcomes. Consider the following example where we define a struct for a dataset that includes a field for sensitive attributes, such as gender or race, which we will handle with care:

#[derive(Debug)]

struct DataPoint {

id: u32,

features: Vec<f64>,

sensitive_attribute: Option<String>, // Sensitive attributes should be handled cautiously

}

fn preprocess_data(data: &mut Vec<DataPoint>) {

// Example of bias detection during preprocessing

let mut counts = std::collections::HashMap::new();

for point in data.iter() {

if let Some(ref attr) = point.sensitive_attribute {

*counts.entry(attr.clone()).or_insert(0) += 1;

}

}

println!("{:?}", counts); // Outputs counts for sensitive attributes

}

In this code snippet, we create a DataPoint struct, which includes a sensitive attribute that we consciously choose to handle with caution. The preprocess_data function counts occurrences of each sensitive attribute, which can be a starting point for identifying potential biases in the dataset. This proactive approach aligns with the ethical necessity to evaluate the fairness of AI systems.

Accountability is another critical aspect of ethical AI development. Rust’s emphasis on memory safety and its ownership model inherently introduce a level of accountability by preventing common programming errors that can lead to vulnerabilities and unpredictable behaviors in AI systems. Furthermore, Rust's community and ecosystem encourage best practices, which can be applied to create models that not only function effectively but also adhere to ethical standards. For example, developers can implement logging mechanisms to track the decision-making process of their models, ensuring that all actions taken by the AI can be scrutinized and understood:

use std::fs::OpenOptions;

use std::io::Write;

fn log_decision(decision: &str) {

let mut file = OpenOptions::new()

.write(true)

.append(true)

.open("decision_log.txt")

.expect("Unable to open file");

writeln!(file, "{}", decision).expect("Unable to write data");

}

In this function, we create a simple logging mechanism that appends decisions made by the AI system to a log file. Such transparency in the decision-making process fosters a culture of accountability and allows stakeholders to understand how and why specific outcomes were reached.

Transparency is also paramount in ethical AI development. Rust can contribute to transparency through its support for explainability features in AI models. Explainability allows users and stakeholders to comprehend how AI systems arrive at particular decisions, which is crucial for building trust. By using libraries such as ndarray for numerical operations and linfa for machine learning, developers can create models that are not only performant but also interpretable. For instance, one might build a linear regression model and provide insights into the contributions of various features:

use linfa::prelude::*;

use linfa_linear::LinearRegression;

use ndarray::{Array1, Array2};

fn train_model(features: Array2<f64>, targets: Array1<f64>) {

let dataset = Dataset::new(features, targets);

let model = LinearRegression::default().fit(&dataset).expect("Failed to fit model");

println!("Model parameters: {:?}", model.params());

}

fn main() {

// Example data

let features = Array2::from_shape_vec((3, 2), vec![1.0, 2.0, 3.0, 4.0, 5.0, 6.0]).unwrap();

let targets = Array1::from_vec(vec![1.0, 2.0, 3.0]);

train_model(features, targets);

}

In this example, we define a function to train a linear regression model, outputting the parameters that represent the contribution of each feature. Such interpretability is essential for users to assess the fairness and reliability of the model's predictions.

In conclusion, Rust's unique features and principles provide a strong foundation for addressing the ethical challenges that arise in AI development. By emphasizing fairness, accountability, and transparency, developers can leverage Rust to build AI systems that not only perform well but also adhere to ethical standards. As we continue to navigate the complexities of AI technologies, the integration of ethical considerations into our development practices will be paramount, and Rust is well-positioned to support these endeavors.

27.9. Preparing for the Future of ML with Rust

As we stand at the crossroads of technology and innovation, the future of Machine Learning (ML) with Rust is ripe with potential. Developers looking to navigate this evolving landscape need to focus on acquiring fundamental skills and knowledge areas that will be foundational in mastering ML in Rust. Understanding core programming paradigms, especially in the context of Rust’s ownership model, will be crucial. Developers should dive deep into the principles of systems programming, concurrency, and memory safety, as these aspects are integral to building efficient and robust ML applications. For instance, Rust's unique features like zero-cost abstractions and fearless concurrency allow developers to leverage computational resources effectively. As an illustration, consider a simple Rust program that utilizes parallel processing to enhance model training efficiency:

use rayon::prelude::*;

fn parallel_training(data: Vec<f64>) -> Vec<f64> {

data.par_iter()

.map(|&x| x * 2.0) // Sample operation to mimic training

.collect()

}

fn main() {

let training_data: Vec<f64> = (0..1_000_000).map(|x| x as f64).collect();

let results = parallel_training(training_data);

println!("Training results: {:?}", &results[..10]);

}

In addition to foundational skills, conceptual ideas play a pivotal role in shaping a developer's journey in ML with Rust. The landscape of machine learning is constantly changing, with new algorithms, frameworks, and best practices emerging at a rapid pace. Continuous learning and adaptation are key to staying relevant in this field. Developers should cultivate a mindset geared towards lifelong learning, embracing new technologies, methodologies, and tools as they arise. For example, immersing oneself in Rust's growing ecosystem of ML libraries, such as ndarray for numerical computing and tch-rs for accessing PyTorch functionalities, can provide significant advantages. Regularly engaging with online courses, webinars, and conferences specifically focused on Rust and ML can also foster a habit of learning and adaptation.

When it comes to practical ideas, it is essential to identify the resources, tools, and communities that can aid in keeping abreast of advancements in Rust and ML. The Rust community is vibrant and welcoming, making it easier for developers to find support and share knowledge. Platforms such as the Rust Users Forum and dedicated sections on GitHub can be invaluable for networking and collaboration. Moreover, contributing to open-source projects not only enhances one’s coding skills but also provides insight into real-world applications of ML in Rust. For example, developers can contribute to projects like rustlearn, a machine learning library written in Rust, thereby gaining hands-on experience and a deeper understanding of how to implement ML algorithms efficiently.

Integrating Rust into machine learning projects can be approached strategically. Developers should consider the performance-critical components of their applications where Rust’s strengths can be leveraged. For instance, when working with existing models developed in Python, Rust can be employed to optimize certain computationally intensive tasks. A common approach involves creating Rust libraries that can be called from Python using FFI (Foreign Function Interface). Below is a simple example demonstrating how to create a Rust function that can be called from Python:

#[no_mangle]

pub extern "C" fn add(x: f64, y: f64) -> f64 {

x + y

}

This Rust function can be compiled into a shared library and invoked within a Python script, enabling the integration of Rust’s performance benefits into ML workflows. Tools like PyO3 can facilitate the binding process, allowing seamless communication between Rust and Python.

In conclusion, preparing for the future of machine learning with Rust requires a multifaceted approach. Developers must build a robust foundation of skills and knowledge, embrace a mindset of continuous learning, and actively engage with the community to stay informed about the latest developments. By strategically integrating Rust into machine learning projects, developers can harness the full potential of this powerful language, paving the way for innovative and efficient ML solutions. As we advance into this exciting future, the intersection of machine learning and Rust presents a unique opportunity for developers to make impactful contributions to the field.

27.10. Conclusion

Chapter 27 equips you with the insights and tools necessary to leverage Rust in the rapidly evolving field of machine learning. By embracing Rust’s capabilities and staying ahead of emerging trends, you can lead the way in developing the next generation of ML systems, ensuring they are not only powerful but also safe, ethical, and scalable.

27.10.1. Further Learning with GenAI

The prompts encourage you to think critically about the integration of Rust in ML and to explore innovative approaches for building scalable, ethical, and high-performance ML systems.

Discuss the current state of machine learning and the challenges it faces. How does Rust address these challenges, particularly in terms of performance, safety, and concurrency? Implement a Rust-based ML solution that exemplifies these strengths.

Explore the key trends in machine learning, such as explainable AI, federated learning, and edge computing. How can Rust contribute to these trends, and what are the specific advantages it offers? Implement a Rust-based project that aligns with one of these trends.

Analyze the intersection of Rust and AI. How does Rust’s design support the development of AI applications, particularly in terms of managing complexity and ensuring safe execution? Implement an AI model in Rust that demonstrates these benefits.

Examine the role of Rust in quantum machine learning. How does Rust’s feature set support the development of quantum algorithms, and what are the potential applications of quantum ML in Rust? Implement a quantum machine learning algorithm in Rust.

Discuss the importance of privacy and data security in federated learning. How does Rust’s emphasis on safety and security make it an ideal choice for federated learning systems? Develop a federated learning model in Rust that prioritizes privacy and security.

Explore the growing demand for real-time machine learning applications at the edge. How does Rust’s performance and concurrency model make it suitable for deploying ML models on edge devices? Implement a real-time ML application in Rust for an edge computing scenario.

Analyze the evolution of Rust-based machine learning frameworks and libraries. How are community-driven development and open-source contributions shaping the Rust ecosystem for ML? Contribute to a Rust-based ML library or develop a new tool.

Discuss the role of ethics in AI development and how Rust’s design principles support the creation of ethical AI systems. Implement an AI model in Rust with built-in safeguards for fairness and transparency.

Explore the future directions of machine learning with Rust. What emerging trends and challenges should developers prepare for, and how can Rust help address these? Implement a cutting-edge ML project in Rust that aligns with these future trends.

Analyze the potential of Rust to become a primary language for AI and machine learning development. What are the key factors driving this shift, and what are the implications for the future of AI? Develop an AI application in Rust that showcases its strengths.

Discuss the challenges of integrating Rust into existing machine learning workflows. How can developers overcome these challenges, and what are the best practices for transitioning to Rust? Implement a Rust-based ML pipeline that integrates with existing tools.

Examine the potential of Rust in advancing explainable AI. How can Rust-based tools enhance the transparency and interpretability of AI models? Develop an explainable AI model in Rust and evaluate its effectiveness.

Explore the role of Rust in developing scalable machine learning systems. How does Rust’s performance and memory management capabilities contribute to building scalable ML solutions? Implement a scalable ML model in Rust for a large dataset.

Discuss the impact of Rust on the future of federated learning. How can Rust-based frameworks support the secure and efficient deployment of federated learning models? Implement a federated learning system in Rust that addresses these challenges.

Analyze the role of Rust in advancing real-time AI applications. How does Rust’s concurrency model support the development of AI systems that operate in real-time? Develop a real-time AI application in Rust and assess its performance.

Explore the future of quantum machine learning with Rust. How can Rust’s integration with quantum computing tools enable the development of quantum-enhanced ML models? Implement a quantum ML model in Rust and evaluate its potential.

Discuss the challenges of ensuring ethical AI development in the context of Rust. How can Rust’s design principles help mitigate ethical risks in AI, such as bias and lack of transparency? Implement an AI model in Rust with ethical safeguards.

Analyze the role of Rust in the future of edge computing for machine learning. How does Rust’s efficiency and low-level control make it ideal for deploying ML models on resource-constrained devices? Develop an edge ML model in Rust.

Explore the potential of Rust to drive innovation in AutoML. How can Rust-based tools and frameworks enhance the automation of ML model selection, tuning, and deployment? Implement an AutoML system in Rust and evaluate its capabilities.

Discuss the implications of Rust’s growth in the machine learning community. How is Rust changing the way ML systems are developed, and what does this mean for the future of the field? Contribute to a Rust-based ML project and reflect on its impact.

Each prompt encourages you to think critically about how Rust can be leveraged to address the challenges of modern machine learning, pushing the boundaries of what is possible. Embrace these challenges as opportunities to deepen your expertise, refine your skills, and position yourself as a leader in the rapidly evolving field of machine learning.

27.10.2. Hands On Practices

By completing these tasks, you will gain hands-on experience with the future-oriented aspects of machine learning, deepening your understanding of how Rust can be used to drive innovation and address the challenges of modern ML development.

Task:

Develop a scalable machine learning model in Rust that can handle large datasets efficiently. Focus on optimizing memory usage and performance to ensure the model scales effectively.

Challenges:

Experiment with different optimization techniques and assess their impact on the model's scalability and performance.

Task:

Implement a federated learning system in Rust, focusing on secure data handling and model training across distributed networks. Ensure that privacy-preserving techniques are integrated into the system.

Challenges:

Experiment with various federated learning strategies and evaluate their effectiveness in maintaining privacy while achieving accurate model results.

Task:

Create a real-time machine learning application in Rust for deployment on edge devices. Optimize the application for low-latency performance and efficient resource usage.

Challenges:

Explore different concurrency models and assess their impact on the application's real-time performance.

Task:

Contribute to an existing Rust-based machine learning library or develop a new library that addresses a specific ML challenge. Focus on creating scalable, efficient, and user-friendly tools.

Challenges:

Engage with the Rust ML community, gather feedback on your contributions, and iterate on your library to improve its functionality and usability.

Task:

Develop an explainable AI model in Rust, incorporating transparency and interpretability features such as feature importance analysis, model simplification, and user-friendly explanations.

Challenges:

Experiment with different explainability techniques and evaluate their effectiveness in making the model more understandable and trustworthy.

These exercises are designed to be challenging and in-depth, requiring you to apply both theoretical knowledge and practical skills in Rust. Embrace the difficulty of these exercises as an opportunity to refine your skills and prepare yourself for the future of machine learning with Rust.

Comments